AI Promises Versus Consulting Reality

Data breaks systems. Not sometimes. Almost always.

Over the past few decades, I've watched countless technologies promise revolution and deliver disappointment. Today's AI wave feels eerily familiar. Breathless headlines proclaim a new era while organizations quietly struggle with implementation realities that vendors conveniently minimize.

Let's be clear about something fundamental: artificial intelligence is only as good as the data feeding it. This isn't a minor technical footnote. It's the difference between transformative success and expensive failure.

The Data Quality Crisis No One Discusses

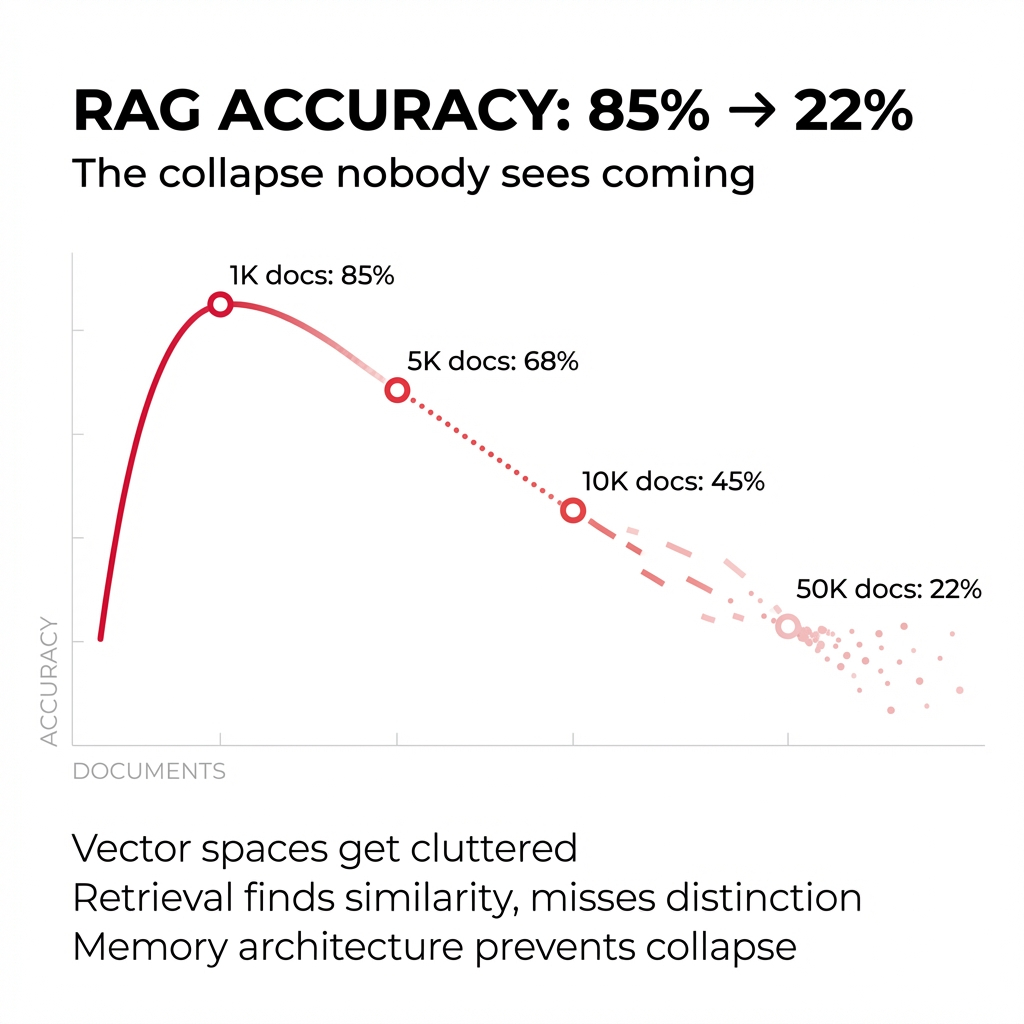

Traditional data warehousing approaches are collapsing under the weight of generative AI requirements. Conventional systems could tolerate certain inconsistencies; modern AI amplifies every data flaw it is fed. A small error in the source data becomes a cascading failure downstream.

What worked for business intelligence reports fails spectacularly for real-time AI applications. The tolerance for error has shrunk to near zero. When a large language model hallucinates, it doesn't just produce a wrong number in a spreadsheet cell. It creates an entirely fabricated reality that appears convincingly authentic.

This represents a fundamental shift. We need operational-level data quality, not analytical-level quality. The difference isn't subtle.
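
One way to picture the distinction is the sketch below. It rests on an assumed reading of the two terms: an analytical-level check accepts a batch as long as the share of bad records stays under a threshold, while an operational-level check validates every record and holds back each one that fails. The `CustomerRecord` shape, the validation rules, and the 5% threshold are all hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class CustomerRecord:
    # Hypothetical record shape, invented for this illustration.
    customer_id: str
    email: Optional[str]
    balance: Optional[float]


def record_problems(record: CustomerRecord) -> List[str]:
    """Return the problems found in one record (an empty list means clean)."""
    problems = []
    if not record.customer_id:
        problems.append("missing customer_id")
    if record.email is None or "@" not in record.email:
        problems.append("missing or malformed email")
    if record.balance is None:
        problems.append("missing balance")
    return problems


def analytical_gate(records: List[CustomerRecord], max_bad_ratio: float = 0.05) -> bool:
    """Analytical-style check: accept the whole batch if few enough records are bad.

    The 5% default is an assumed tolerance, not a recommended value.
    """
    if not records:
        return True
    bad = sum(1 for r in records if record_problems(r))
    return bad / len(records) <= max_bad_ratio


def operational_gate(
    records: List[CustomerRecord],
) -> Tuple[List[CustomerRecord], List[Tuple[CustomerRecord, List[str]]]]:
    """Operational-style check: validate each record and hold back every failure."""
    clean, rejected = [], []
    for r in records:
        problems = record_problems(r)
        if problems:
            rejected.append((r, problems))
        else:
            clean.append(r)
    return clean, rejected
```

With a batch of 40 records in which one has no email, `analytical_gate` would pass the batch as a whole, bad record included, while `operational_gate` would return 39 clean records and set the one failure aside with its reason. In a reporting context the first behavior is often acceptable; when each record may be surfaced to a user by an AI application, the second is closer to what the argument here calls for.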

Organizations rushing headlong into AI implementations without addressing foundational data infrastructure are building digital houses of cards. They're setting themselves up for spectacular, public failures that will ultimately damage both their operations and reputations.

The Capabilities Gap Remains Substantial

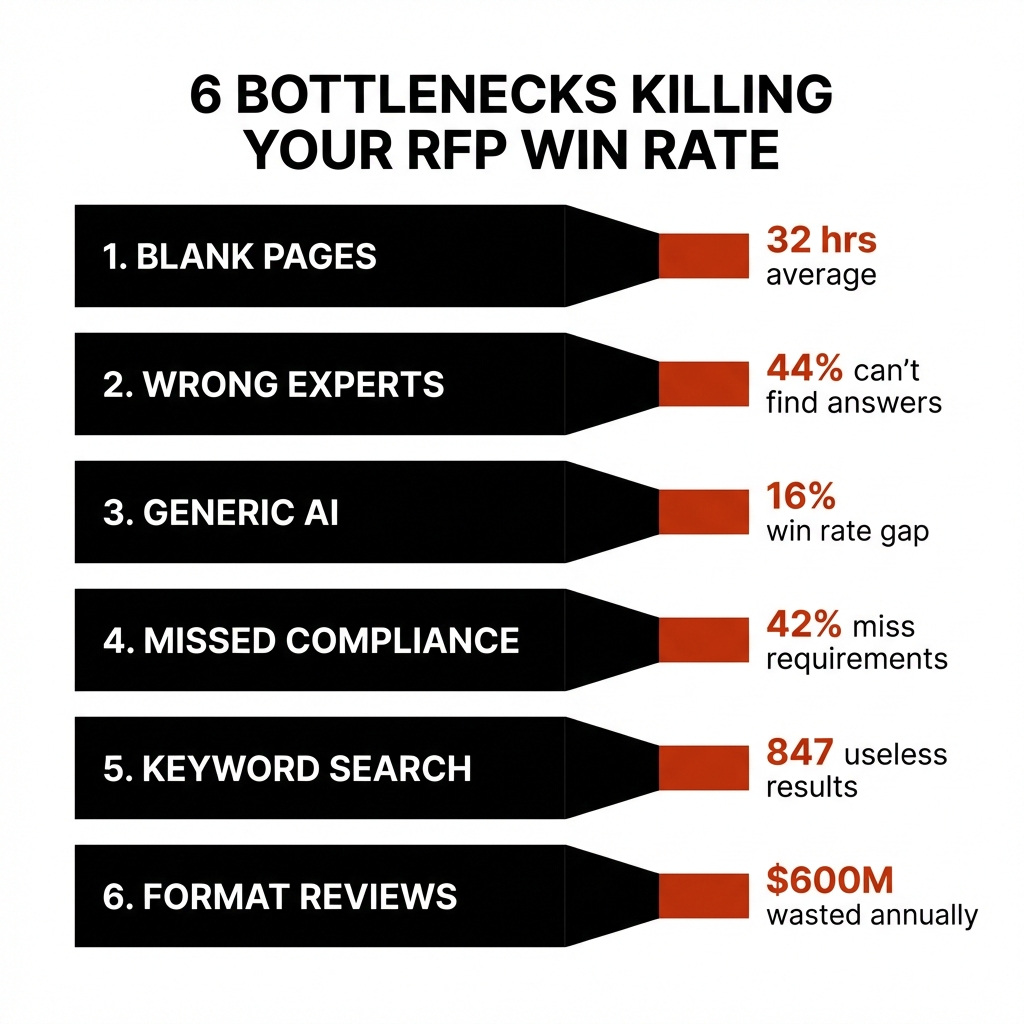

Recent benchmarks reveal uncomfortable truths about AI capabilities. Despite impressive advances, today's most sophisticated models still struggle with basic mathematical reasoning, logical consistency, and factual reliability.

The consulting world faces a particular challenge. Our value comes from complex problem-solving and nuanced analysis. Current AI excels at pattern recognition and information synthesis but falters precisely where consultants add their highest value: navigating ambiguity and creating novel solutions to unprecedented problems.

Human oversight isn't just recommended. It's required. Not as a temporary measure until AI "gets better," but as a fundamental component of responsible AI implementation. The notion that AI will autonomously handle complex knowledge work without human partnership represents dangerous magical thinking.

The Sovereignty Problem We Cannot Ignore

Beyond technical limitations lurks an equally troubling ethical dimension. Current AI development models extract value from public and private data sources without appropriate consent or compensation. This creates profound questions about data sovereignty.

Who owns the insights derived from organizational knowledge? When a model trained on proprietary data generates valuable outputs, where does ownership reside? These aren't abstract philosophical questions but concrete business concerns with significant financial implications.

The consulting industry relies particularly heavily on intellectual property and proprietary methodologies. When these assets become training fodder for AI systems, we face existential questions about value creation and capture.

Equally concerning are the documented biases embedded in many AI systems. Models trained predominantly on data from privileged populations systematically underserve or actively harm marginalized communities. Without deliberate intervention, AI threatens to amplify existing inequalities rather than mitigate them.

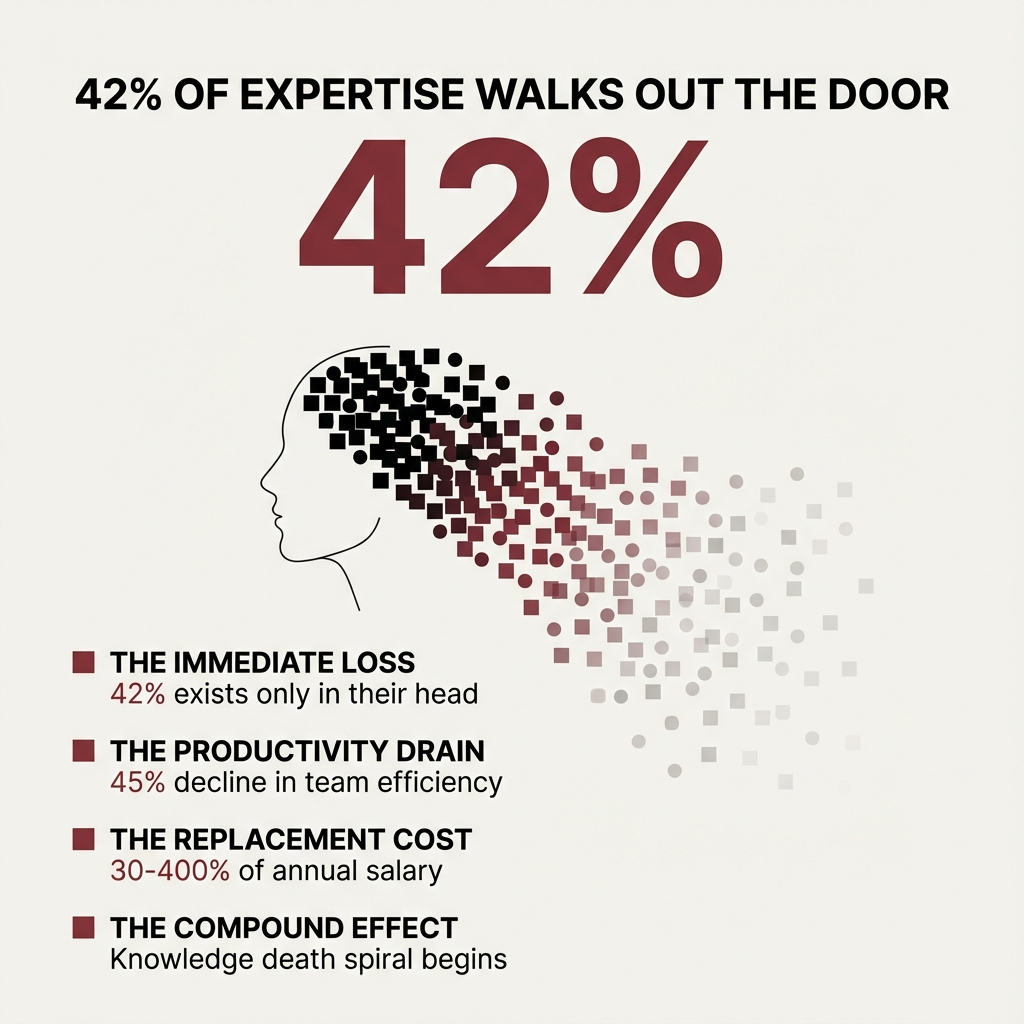

Workforce Transformation Requires Honesty

The conversation about AI's workforce impact swings between two unhelpful extremes: apocalyptic job-loss predictions and Pollyannaish promises of painless transitions. Reality lies somewhere in between.

Certain job functions will absolutely disappear. Others will transform substantially. New roles will emerge. The transition won't be automatic or painless.

Organizations must invest heavily in reskilling initiatives while being transparent about changing requirements. Workers deserve honesty about how their roles will evolve. Pretending nothing will change does a profound disservice to employees who need time and resources to adapt.

At Experio Labs, we focus on human-AI partnership models that enhance knowledge worker capabilities rather than replace them. This approach acknowledges both AI's strengths and its limitations while preserving the irreplaceable human elements of creativity, judgment, and ethical reasoning.

The Path Forward Requires Balance

Despite these challenges, I remain cautiously optimistic about AI's potential to transform consulting and professional services. The key lies in balancing technological enthusiasm with clear-eyed pragmatism.

Organizations that succeed with AI will build on three foundational elements:

First, they'll invest in robust data governance systems that ensure information quality at the operational level. Second, they'll develop transparent AI deployment frameworks with appropriate human oversight. Third, they'll create equitable models that properly recognize and compensate data contributors.

The AI revolution isn't inevitable. It's a choice we make through countless small decisions about implementation, governance, and ethics. Those choices will determine whether AI becomes a democratizing force or another mechanism for concentrating power and wealth.

As industry leaders, we bear responsibility for steering this technology toward its highest potential while mitigating its risks. That requires moving beyond the hype cycle to address fundamental questions about data quality, model limitations, and ethical deployment.

The greatest danger isn't AI itself but our collective tendency toward uncritical technological optimism. By approaching AI with appropriate skepticism and rigorous standards, we can harness its capabilities while avoiding its pitfalls.

The future remains unwritten. Let's ensure we craft it wisely.